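The previous post covered the basic usage. That approach is very flexible, but it also takes more code. In my industry most crawlers are targeted crawlers: they only need to collect a fixed set of pages and turn them into structured data. To speed up development, I implemented a way to build crawlers by configuring entity classes.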

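Create a Console project

Add the DotnetSpider2.Extension package via NuGet

Define a configuration-driven data object

- The data object must inherit from SpiderEntity

- EntityTableAttribute defines the database name, the table name and its suffix, the indexes, the primary key, and any fields that need to be updated

- EntitySelector defines the rule for extracting data objects from the page

- TargetUrlsSelector defines which links count as targets (matched by regular expression) and should be added to the queue

Define a bare data object class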

    public class Product : SpiderEntity
    {
    }

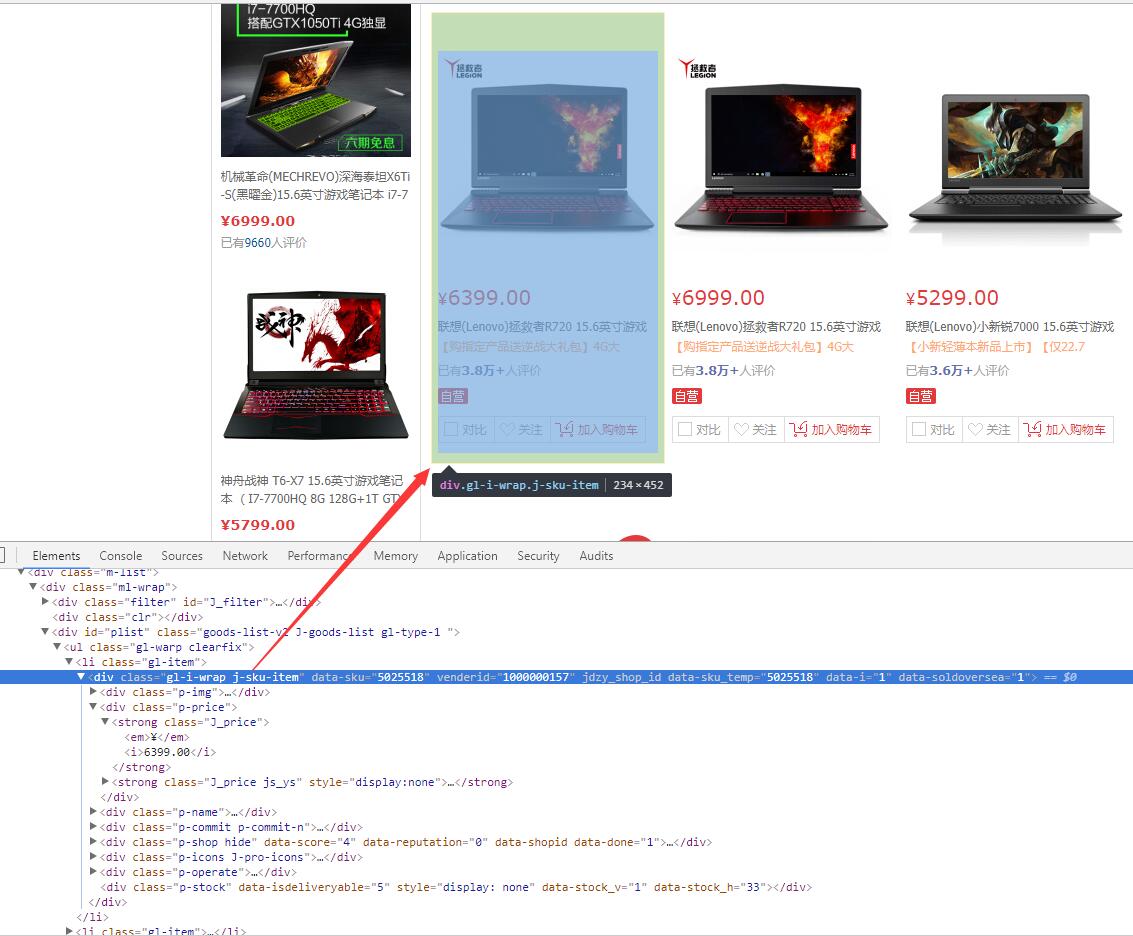

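Open the JD product listing page in Chrome: http://list.jd.com/list.html?cat=9987,653,655&page=2&JL=6_0_0&ms=5#J_main

- Press F12 to open the developer tools

- Select a product and inspect its HTML structure

Every product sits under a DIV whose class is gl-i-wrap j-sku-item, so add an EntitySelector attribute to the Product data object class. (There is more than one way to write the XPath. If you are not familiar with XPath, W3School is a good place to learn it. The framework also supports CSS selectors and even regular expressions for picking out the right HTML fragment.)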

    [EntitySelector(Expression = "//li[@class='gl-item']/div[contains(@class,'j-sku-item')]")]
    public class Product : SpiderEntity
    {
    }


Add the database and index information

    [EntityTable("test", "sku", EntityTable.Monday, Indexs = new[] { "Category" }, Uniques = new[] { "Category,Sku", "Sku" })]
    [EntitySelector(Expression = "//li[@class='gl-item']/div[contains(@class,'j-sku-item')]")]
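The `EntityTable.Monday` suffix suggests that data lands in a separate table for each week. As a rough sketch of that idea, and not DotnetSpider's actual implementation, the following computes the Monday of the week containing a given date and appends it to a table name. The `WeeklyTableName` class and the `yyyy_MM_dd` suffix format are assumptions made purely for illustration; the format the framework really uses may differ.

```csharp
using System;
using System.Globalization;

public static class WeeklyTableName
{
    // Returns midnight on the Monday of the week that contains the given date.
    public static DateTime MondayOf(DateTime date)
    {
        // DayOfWeek: Sunday = 0, Monday = 1, ..., Saturday = 6.
        int daysSinceMonday = ((int)date.DayOfWeek + 6) % 7;
        return date.Date.AddDays(-daysSinceMonday);
    }

    // Hypothetical naming scheme: "<table>_<date of that week's Monday>".
    public static string For(string table, DateTime date)
    {
        string suffix = MondayOf(date).ToString("yyyy_MM_dd", CultureInfo.InvariantCulture);
        return table + "_" + suffix;
    }
}
```

Under this assumed scheme, every run within the same week resolves to the same table name, so repeated runs in one week would write to one table and the unique keys on Sku would prevent duplicates.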

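Suppose you need to collect the SKU. Inspect the HTML structure and work out the relative XPath. Why relative? Because EntitySelector has already cut the HTML into fragments, every element query inside the entity is evaluated relative to the element that EntitySelector matched. Finally, add the database column information.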

    [EntityTable("test", "sku", EntityTable.Monday, Indexs = new[] { "Category" }, Uniques = new[] { "Category,Sku", "Sku" })]
    [EntitySelector(Expression = "//li[@class='gl-item']/div[contains(@class,'j-sku-item')]")]
    public class Product : SpiderEntity
    {
        [PropertyDefine(Expression = "./@data-sku")]
        public string Sku { get; set; }
    }
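To make the "relative XPath" point concrete, here is a minimal sketch that uses only the .NET base class library (`System.Xml.Linq` and `System.Xml.XPath`), not DotnetSpider itself. The `RelativeXPathSketch` class and the tiny well-formed `Page` snippet are made up for illustration; a real page is HTML rather than XML and would need an HTML parser. The sketch first selects each product fragment with the entity-level XPath, then evaluates `./@data-sku` against each fragment.

```csharp
using System.Collections;
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;
using System.Xml.XPath;

public static class RelativeXPathSketch
{
    // Hypothetical, well-formed stand-in for the product listing markup.
    public const string Page =
        "<ul>" +
        "<li class='gl-item'><div class='gl-i-wrap j-sku-item' data-sku='1001'><em>Phone A</em></div></li>" +
        "<li class='gl-item'><div class='gl-i-wrap j-sku-item' data-sku='1002'><em>Phone B</em></div></li>" +
        "</ul>";

    public static List<string> ExtractSkus(string xml)
    {
        XDocument doc = XDocument.Parse(xml);
        var skus = new List<string>();

        // Step 1: the entity-level XPath cuts the document into one fragment per product.
        var fragments = doc.XPathSelectElements(
            "//li[@class='gl-item']/div[contains(@class,'j-sku-item')]");

        foreach (XElement fragment in fragments)
        {
            // Step 2: the property-level XPath is evaluated with the fragment as context node.
            var attributes = ((IEnumerable)fragment.XPathEvaluate("./@data-sku")).Cast<XAttribute>();
            skus.Add(attributes.First().Value);
        }
        return skus;
    }
}
```

Calling `RelativeXPathSketch.ExtractSkus(RelativeXPathSketch.Page)` yields one SKU per fragment, which is the reason the property expression starts with `./` instead of `//`.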


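Inside the crawler, each link is stored as a Request object. Extra property values can be attached when a Request is constructed, and a data object is then allowed to read its values from those extras.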

    [EntityTable("test", "sku", EntityTable.Monday, Indexs = new[] { "Category" }, Uniques = new[] { "Category,Sku", "Sku" })]
    [EntitySelector(Expression = "//li[@class='gl-item']/div[contains(@class,'j-sku-item')]")]
    public class Product : SpiderEntity
    {
        [PropertyDefine(Expression = "./@data-sku")]
        public string Sku { get; set; }

        [PropertyDefine(Expression = "name", Type = SelectorType.Enviroment)]
        public string Category { get; set; }
    }

Configure the spider (inherit from EntitySpider)

public class JdSkuSampleSpider : EntitySpider

{ public JdSkuSampleSpider() : base("JdSkuSample", new Site

{ //HttpProxyPool = new HttpProxyPool(new KuaidailiProxySupplier("快代理API")) })

{

} protected override void MyInit(params string[] arguments)

{

Identity = Identity ?? "JD SKU SAMPLE";

ThreadNum = 1; // dowload html by http client

Downloader = new HttpClientDownloader(); // storage data to mysql, default is mysql entity pipeline, so you can comment this line. Don't miss sslmode.

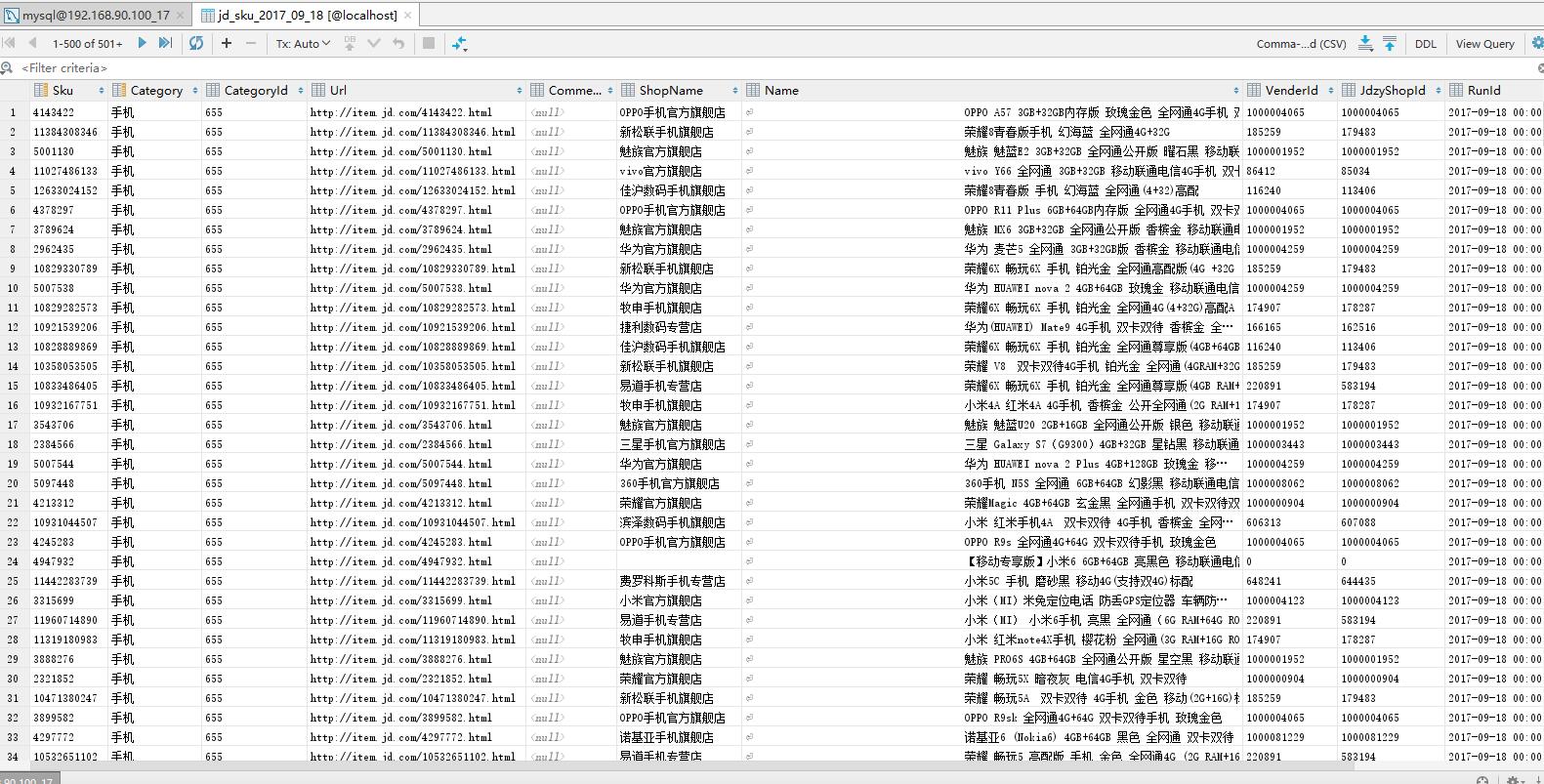

AddPipeline(new MySqlEntityPipeline("Database='mysql';Data Source=localhost;User ID=root;Password=;Port=3306;SslMode=None;"));

AddStartUrl("http://list.jd.com/list.html?cat=9987,653,655&page=2&JL=6_0_0&ms=5#J_main", new Dictionary<string, object> { { "name", "手机" }, { "cat3", "655" } });

AddEntityType<Product>();

}

}


The second argument of AddStartUrl, a Dictionary<string, object>, supplies the data that Enviroment lookups read from.
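The lookup can be pictured as a keyed read from that dictionary followed by a conversion to the property's type. The sketch below shows the idea with plain .NET types only. `ExtrasLookupSketch` and `Resolve` are hypothetical names, and the conversion via `Convert.ChangeType` is an assumption about how a string such as "655" could end up in an `int` property; it is not taken from the framework's source.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

public static class ExtrasLookupSketch
{
    // Reads `key` from the per-request extras and converts the value to T.
    // Returns default(T) when the key is missing or the stored value is null.
    public static T Resolve<T>(IReadOnlyDictionary<string, object> extras, string key)
    {
        if (!extras.TryGetValue(key, out object raw) || raw == null)
        {
            return default(T);
        }
        // Culture-invariant conversion, e.g. the string "655" becomes the int 655.
        return (T)Convert.ChangeType(raw, typeof(T), CultureInfo.InvariantCulture);
    }
}
```

With the start URL extras from this post, `Resolve<string>(extras, "name")` would give the category name and `Resolve<int>(extras, "cat3")` would give the numeric category id.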


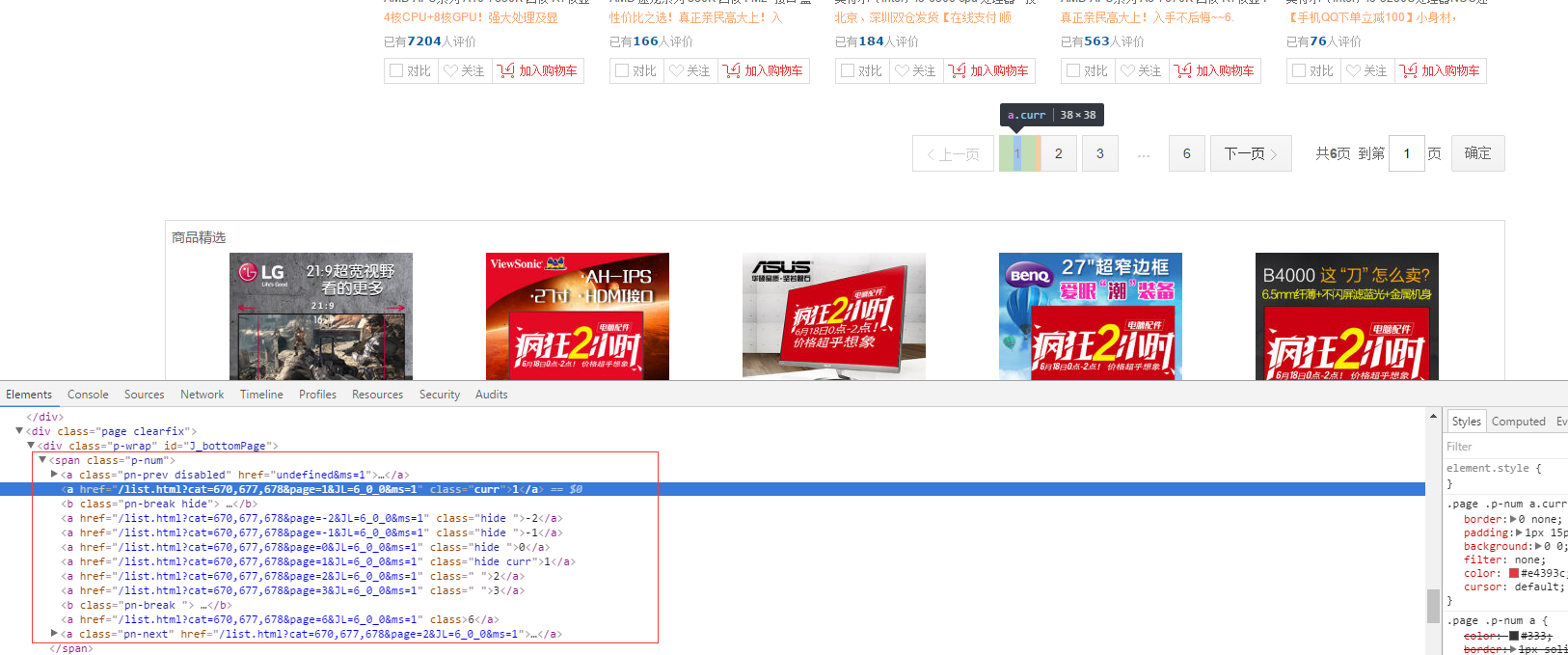

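TargetUrlsSelector configures both the validation of data links and the discovery of target URLs. The configuration below means that target URLs are collected from the region selected by the XPath, and that each one must match the regular expression &page=[0-9]+&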

    [EntityTable("test", "jd_sku", EntityTable.Monday, Indexs = new[] { "Category" }, Uniques = new[] { "Category,Sku", "Sku" })]
    [EntitySelector(Expression = "//li[@class='gl-item']/div[contains(@class,'j-sku-item')]")]
    [TargetUrlsSelector(XPaths = new[] { "//span[@class=\"p-num\"]" }, Patterns = new[] { @"&page=[0-9]+&" })]
    public class Product : SpiderEntity
    {
        [PropertyDefine(Expression = "./@data-sku")]
        public string Sku { get; set; }

        [PropertyDefine(Expression = "name", Type = SelectorType.Enviroment)]
        public string Category { get; set; }
    }
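The pattern half of that rule is ordinary regular expression matching. As a small sketch using only `System.Text.RegularExpressions`, the following filters a list of candidate links down to those that contain `&page=<digits>&`. The `TargetUrlFilterSketch` class and the de-duplication step are illustrative assumptions, not the framework's code.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Text.RegularExpressions;

public static class TargetUrlFilterSketch
{
    // Same pattern as in the TargetUrlsSelector attribute.
    private static readonly Regex PagePattern = new Regex(@"&page=[0-9]+&");

    // Keeps only links that match the pattern and drops repeated links.
    public static List<string> Filter(IEnumerable<string> candidates)
    {
        return candidates
            .Where(url => PagePattern.IsMatch(url))
            .Distinct()
            .ToList();
    }
}
```

Note that the pattern requires a trailing `&`, so a link that ends in `&page=3` with nothing after it would not be accepted.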


Add a MySQL data pipeline. All it needs is a connection string.

    context.AddPipeline(new MySqlEntityPipeline("Database='test';Data Source=localhost;User ID=root;Password=1qazZAQ!;Port=3306"));

Full code

    using System;
    using System.Collections.Generic;
    // The DotnetSpider namespaces from the DotnetSpider2.Extension package are also required.

    public class JdSkuSampleSpider : EntitySpider
    {
        public JdSkuSampleSpider() : base("JdSkuSample", new Site
        {
            //HttpProxyPool = new HttpProxyPool(new KuaidailiProxySupplier("Kuaidaili API"))
        })
        {
        }

        protected override void MyInit(params string[] arguments)
        {
            Identity = Identity ?? "JD SKU SAMPLE";
            ThreadNum = 1;

            // Download HTML with the HTTP client.
            Downloader = new HttpClientDownloader();

            // Store data in MySQL. The MySQL entity pipeline is the default, so this line can be commented out. Don't omit SslMode.
            AddPipeline(new MySqlEntityPipeline("Database='mysql';Data Source=localhost;User ID=root;Password=;Port=3306;SslMode=None;"));

            // "手机" means "mobile phone"; both values are read through Enviroment selectors below.
            AddStartUrl("http://list.jd.com/list.html?cat=9987,653,655&page=2&JL=6_0_0&ms=5#J_main", new Dictionary<string, object> { { "name", "手机" }, { "cat3", "655" } });

            AddEntityType<Product>();
        }
    }

    [EntityTable("test", "jd_sku", EntityTable.Monday, Indexs = new[] { "Category" }, Uniques = new[] { "Category,Sku", "Sku" })]
    [EntitySelector(Expression = "//li[@class='gl-item']/div[contains(@class,'j-sku-item')]")]
    [TargetUrlsSelector(XPaths = new[] { "//span[@class=\"p-num\"]" }, Patterns = new[] { @"&page=[0-9]+&" })]
    public class Product : SpiderEntity
    {
        [PropertyDefine(Expression = "./@data-sku", Length = 100)]
        public string Sku { get; set; }

        [PropertyDefine(Expression = "name", Type = SelectorType.Enviroment, Length = 100)]
        public string Category { get; set; }

        [PropertyDefine(Expression = "cat3", Type = SelectorType.Enviroment)]
        public int CategoryId { get; set; }

        [PropertyDefine(Expression = "./div[1]/a/@href")]
        public string Url { get; set; }

        [PropertyDefine(Expression = "./div[5]/strong/a")]
        public long CommentsCount { get; set; }

        [PropertyDefine(Expression = ".//div[@class='p-shop']/@data-shop_name", Length = 100)]
        public string ShopName { get; set; }

        [PropertyDefine(Expression = ".//div[@class='p-name']/a/em", Length = 100)]
        public string Name { get; set; }

        [PropertyDefine(Expression = "./@venderid", Length = 100)]
        public string VenderId { get; set; }

        [PropertyDefine(Expression = "./@jdzy_shop_id", Length = 100)]
        public string JdzyShopId { get; set; }

        [PropertyDefine(Expression = "Monday", Type = SelectorType.Enviroment)]
        public DateTime RunId { get; set; }
    }

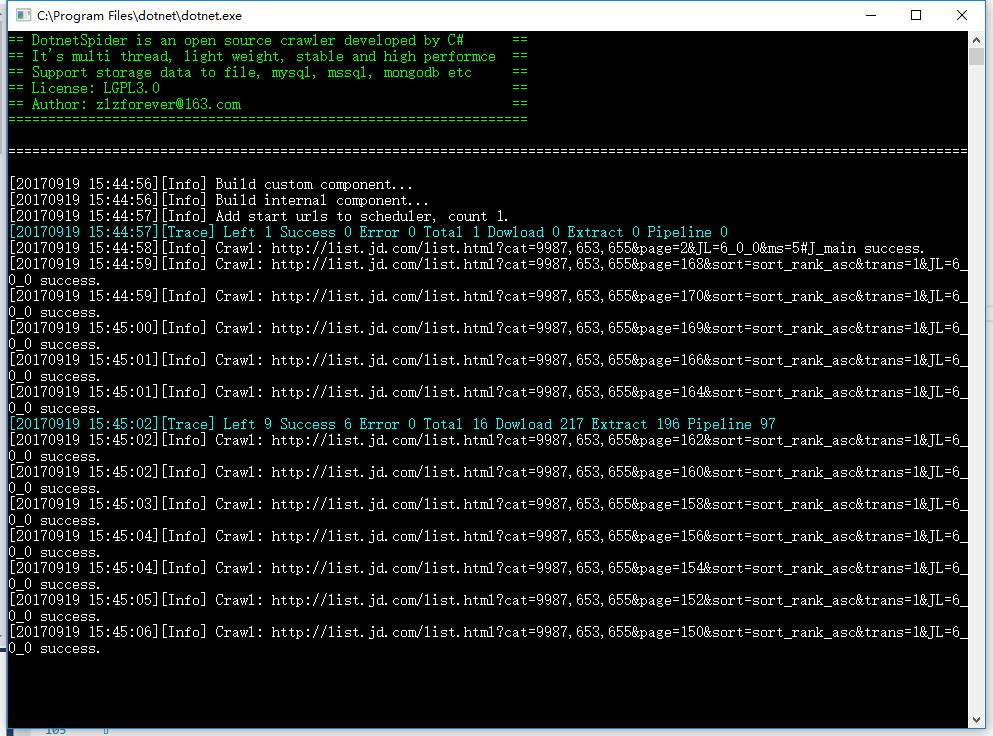

Run the spider

    public class Program
    {
        public static void Main(string[] args)
        {
            JdSkuSampleSpider spider = new JdSkuSampleSpider();
            spider.Run();
        }
    }

A complete crawler in fewer than 57 lines of code. Remarkably simple, isn't it?